A Roadmap to AI-ready Data Center Technology

AI workloads demand more from data center infrastructure than traditional applications. Models are larger, GPU racks are denser, and east-west traffic is higher, so training and inference push the limits of power, cooling, storage, and the network. To deliver faster training cycles, stable inference latency, and controlled spend while managing risk, you need a clear, phased plan.

This guide explains the core building blocks of an AI-ready data center and gives you a step-by-step plan from pilot to scale, with milestones, decision checklists, and cost and performance targets so you can build a powerful and cost-effective AI environment at scale.

GPU Clusters and Capacity Planning for an AI‑ready Data Center

AI compute has unique requirements. Training jobs, for example, run for extended periods and require sustained compute, storage, and network throughput. Inference workloads behave differently: they tend to be bursty and more sensitive to latency. Both rely on GPUs and accelerators that run at high power density, which means facilities need careful planning across electrical, mechanical, and physical domains. The challenge intensifies as clusters grow from pilots to production, where the building blocks must scale without disrupting existing operations.

Top Considerations for Building an AI-ready Data Center

- Workload characteristics and planning: Classify your training and inference patterns to correctly size your environment for both steady and spiky demand. Set clear performance targets, such as throughput and latency, while leaving ample headroom for future growth.

- Power and redundancy: Confirm your service has the capacity for high per-rack power draws (15-30kW+). Select a redundancy model (N+1 or 2N) and ensure your UPS and generators can handle spiky AI loads.

- Advanced cooling: Choose a liquid cooling option that fits your operations. Validate it can handle variable AI loads and use real-time controls to tune performance.

- Density, floor planning, and racks: Plan rack counts, weight, and service clearances for your floor layout. Choose deeper racks (≥1200 mm) so GPU servers, cabling bend radius, and cooling manifolds fit without blocking airflow.

- Network and fabric hooks: Select a high-performance fabric, like InfiniBand or RDMA over Ethernet, and pre-provision all necessary ports and cabling with significant headroom for future growth.

- Modular growth model: Standardize your infrastructure into repeatable building blocks. Pre-provisioning power, cooling, and fabric capacity allows you to add resources incrementally without disruption.

- Monitoring and management: Use DCIM and runtime telemetry to track power, thermals, and device health in real time. Analyzing this data allows you to proactively spot issues and continuously optimize your operations.
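The power and density considerations above can be turned into a first-pass rack estimate. The sketch below assumes power, not floor space, is the limiting factor; the function name `racks_needed`, the 10 kW server draw, and the 20% headroom are illustrative assumptions, not figures from this guide.

```python
import math


def racks_needed(num_servers: int, server_kw: float,
                 rack_budget_kw: float, headroom: float = 0.2) -> dict:
    """Estimate rack count when per-rack power is the binding constraint.

    All inputs are planning assumptions; replace them with measured draw.
    """
    if not 0 <= headroom < 1:
        raise ValueError("headroom must be in [0, 1)")
    # Reserve a share of the rack budget for spikes and future growth.
    usable_kw = rack_budget_kw * (1 - headroom)
    servers_per_rack = math.floor(usable_kw / server_kw)
    if servers_per_rack < 1:
        raise ValueError("one server exceeds the usable rack budget")
    return {
        "servers_per_rack": servers_per_rack,
        "racks": math.ceil(num_servers / servers_per_rack),
        "total_it_load_kw": num_servers * server_kw,
    }


# Hypothetical example: 16 GPU servers at 10 kW each, 30 kW racks.
plan = racks_needed(num_servers=16, server_kw=10.0, rack_budget_kw=30.0)
print(plan)
```

With these assumed inputs, only two servers fit per rack once headroom is reserved, which is why the rack count should be checked against weight limits and service clearances before the floor plan is fixed.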

Quick Checklist

✔ Define workload profiles and set throughput/latency targets

✔ Verify power capacity at rack, row, and facility levels

✔ Select cooling approach and validate under AI load patterns

✔ Plan rack depth, weight limits, and service clearances

✔ Pre-provision network capacity for future growth

✔ Implement runtime monitoring and trending

Watch Out For

✘ Don’t underestimate heat rejection for dense GPU trays or ignore rack depth

✘ Avoid treating pilots like permanent architectures and locking into limits too early

✘ Don’t scale compute before upgrading power distribution, cooling controls, or network fabric

✘ Don’t skip runtime monitoring and trending for power and thermal behavior

Network Requirements for AI Data Center Workloads

The demands AI places on a network are driven by two distinct, high-stakes patterns. On one hand, model training generates intense microbursts and heavy east-west traffic across storage and compute. On the other, inference requires consistently low-latency delivery for real-time results. Despite these different needs, both demand a network with proper bandwidth and precise congestion control to function without blockers.

Design Around These Core Technologies

Building a network that can handle these unique demands starts with putting the right foundational technologies in place. Let’s look at the essential components:

- High‑bandwidth fabrics: Use InfiniBand for tightly coupled training clusters or Ethernet with RDMA (Remote Direct Memory Access) like RoCE (RDMA over Converged Ethernet) or iWARP (Internet Wide Area RDMA Protocol) for flexible, standards‑based designs.

- Quality of Service (QoS) and Explicit Congestion Notification (ECN): Classify traffic and apply priority queuing. Use ECN, which flags congestion before packets are dropped, to keep latency low and predictable.

- Loss management for RDMA: RDMA lets servers move data directly between memory, which reduces latency and CPU load. Prefer ECN-based congestion control with shallow queues (DCQCN is the usual choice for RoCEv2; DCTCP applies to TCP-based traffic such as iWARP). Use PFC (Priority Flow Control) only where necessary, and if enabled, scope it to RDMA classes and monitor for head-of-line blocking.

- Telemetry and observability: Enable real‑time fabric telemetry so you can detect microbursts and tune queues.

- Modular spine‑and‑leaf capacity: Pre‑provision spine stages, structured cabling, and optics so you can add leaf switches and spines without downtime.
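When pre-provisioning leaf and spine capacity, one useful number is the leaf oversubscription ratio: total server-facing bandwidth divided by total uplink bandwidth. The following is a minimal sketch; the function names and the port counts and speeds in the example are hypothetical.

```python
import math


def oversubscription_ratio(downlinks: int, downlink_gbps: float,
                           uplinks: int, uplink_gbps: float) -> float:
    """Server-facing bandwidth divided by spine-facing bandwidth on one leaf."""
    uplink_capacity = uplinks * uplink_gbps
    if uplink_capacity <= 0:
        raise ValueError("uplink capacity must be positive")
    return (downlinks * downlink_gbps) / uplink_capacity


def uplinks_for_target(downlinks: int, downlink_gbps: float,
                       uplink_gbps: float, target_ratio: float) -> int:
    """Smallest uplink count that keeps the leaf at or below target_ratio."""
    if uplink_gbps <= 0 or target_ratio <= 0:
        raise ValueError("uplink speed and target ratio must be positive")
    return math.ceil((downlinks * downlink_gbps) / (uplink_gbps * target_ratio))


# Hypothetical leaf: 32 x 200G server ports, 8 x 400G uplinks.
print(oversubscription_ratio(32, 200, 8, 400))   # 2.0, i.e. 2:1
print(uplinks_for_target(32, 200, 400, 1.0))     # 16 uplinks for 1:1
```

A ratio of 1.0 is non-blocking at the leaf. Cabling and optics for the lower target can be reserved up front even if the uplinks are lit later.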

Practical Guidance

Having the right technology, however, is only part of the equation. To get the performance you need, it’s crucial to configure and manage it correctly. Here’s how to put those technologies to work effectively:

- Define separate QoS (Quality of Service) profiles for training and inference so bursty training traffic doesn’t starve latency-sensitive inference.

- Keep one path MTU (Maximum Transmission Unit) end-to-end across NIC (Network Interface Card), ToR (Top-of-Rack), and spine to prevent fragmentation/retransmits that push up p99 latency.

- Align oversubscription to workload and storage locality. Keep headroom so typical utilization stays below target thresholds and p99 latency stays within budget.

- Set capacity triggers for expansion so you add links or spine stages before users feel slowdowns. Define hard thresholds such as oversubscription above target, sustained buffer occupancy (e.g., > X% for Y minutes), or p95/p99 latency over budget, and alert on them to prompt planned upgrades during a change window.

- Run acceptance tests before go-live and after changes so you verify the fabric still meets targets and catch regressions early. Include line-rate with mixed flow sizes, microburst tests, and failover, and record baseline throughput and latency to compare after every change.
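The capacity triggers described above ("> X% for Y minutes", p95/p99 over budget) can be checked with two small helpers. This is a sketch under stated assumptions: samples arrive at a fixed interval, the percentile uses the nearest-rank method, and every threshold in the example is a placeholder rather than a recommended value.

```python
import math
from typing import Sequence


def percentile(samples: Sequence[float], pct: float) -> float:
    """Nearest-rank percentile (pct in 0..100) of a non-empty sample set."""
    if not samples:
        raise ValueError("no samples")
    ordered = sorted(samples)
    rank = max(1, math.ceil(pct * len(ordered) / 100))
    return ordered[rank - 1]


def sustained_above(series: Sequence[float], threshold: float,
                    min_consecutive: int) -> bool:
    """True if at least min_consecutive back-to-back samples exceed threshold."""
    run = 0
    for value in series:
        run = run + 1 if value > threshold else 0
        if run >= min_consecutive:
            return True
    return False


# Hypothetical one-minute buffer occupancy samples, in percent.
occupancy = [40, 85, 90, 88, 50, 91]
# Hypothetical latency samples in microseconds.
latency_us = list(range(1, 101))

expand = (
    sustained_above(occupancy, threshold=80, min_consecutive=3)
    or percentile(latency_us, 99) > 120  # placeholder latency budget
)
print(expand)  # True: occupancy stayed above 80% for three samples in a row
```

Requiring consecutive samples keeps a single microburst from raising an expansion alert, while the percentile check catches slow tail-latency drift.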

One Network, Two Demands: AI networks handle two different jobs at once: the heavy, spiky traffic of model training and the fast, stable needs of real-time inference. Planning for both is essential.

AI Storage and Data Pipelines for Training and Inference

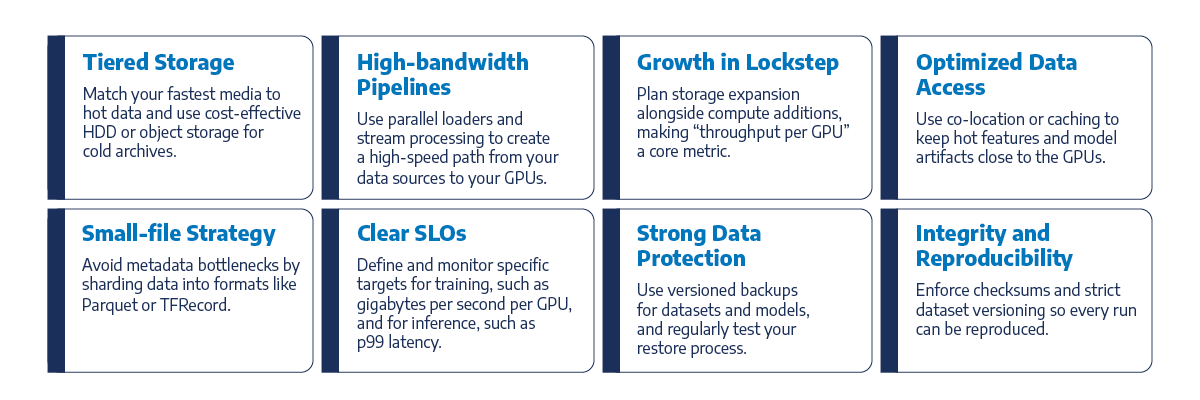

AI storage serves two purposes: to feed training with high-throughput, parallel streams and to deliver fast, consistent reads for inference. If ingest, preprocessing, and feature pipelines aren’t engineered with storage, they become the bottleneck.

Best Practices for AI Storage

A successful storage strategy addresses these challenges head-on. Here are the key practices to implement:

Key Insight: Fast storage is not enough. To truly feed your GPUs, you need to build a high-bandwidth data pipeline to match.
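A rough way to test this insight is to compare the read rate the GPUs need with the slowest stage of the pipeline that feeds them. The sketch below is illustrative only: the function names, stage names, and every number in the example are assumptions, and real sizing should use measured sample rates and sizes.

```python
def required_read_gbs(num_gpus: int, samples_per_sec_per_gpu: float,
                      bytes_per_sample: float) -> float:
    """Aggregate read rate (GB/s) needed to keep every GPU fed."""
    return num_gpus * samples_per_sec_per_gpu * bytes_per_sample / 1e9


def slowest_stage(stage_gbs: dict) -> tuple:
    """Return (name, GB/s) of the pipeline stage with the least throughput."""
    name = min(stage_gbs, key=stage_gbs.get)
    return name, stage_gbs[name]


# Hypothetical job: 64 GPUs, 2,000 samples/s each, 150 KB per sample.
need = required_read_gbs(64, 2000, 150_000)

# Hypothetical measured throughput per pipeline stage, in GB/s.
stages = {"storage_read": 25.0, "preprocess": 12.0, "network": 40.0}
name, rate = slowest_stage(stages)

print(f"need {need:.1f} GB/s, limited by {name} at {rate:.1f} GB/s")
print("GPUs will stall" if rate < need else "pipeline keeps up")
```

In this made-up case the storage tier is fast enough, but preprocessing is not, so adding faster storage alone would not raise GPU utilization.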

AI Data Center Security and Compliance

Integrating AI into your data center fundamentally changes your security posture. Beyond traditional threats, you now face risks to model integrity and sensitive data flows, along with new potential attack vectors in your MLOps pipelines and inference servers. All these new considerations must be addressed within the strict boundaries of regulatory frameworks such as GDPR, HIPAA, or PCI-DSS, making a proactive strategy essential.

Core Controls

A strong security posture for AI starts with a foundation of essential technical controls. These measures are designed to protect your data, models, and infrastructure from the ground up:

Readiness Activities

Technology alone isn’t enough. Proactive readiness activities ensure your controls are effective and your team is prepared to respond when an incident occurs:

Conduct Regular Security Assessments

Routinely scan all endpoints, hosts, and network devices for vulnerabilities and misconfigurations.

Map Controls to Compliance Frameworks

Document how your data security measures satisfy the requirements of regulations that apply to your business.

Build and Test Incident Response Plans

Create runbooks for specific AI threats like model tampering or data exposure, and test them with quarterly tabletop exercises.

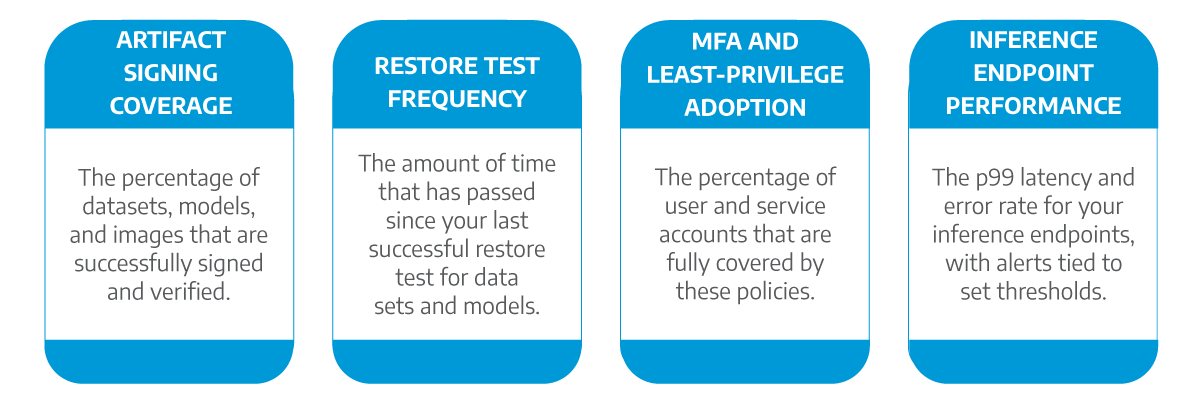

KPIs to Track

To ensure your security program stays effective, track key performance indicators (KPIs) that measure its health and maturity. Focus on metrics like:

Tip: Gate every model and dataset promotion with signature verification and keep immutable logs. Pair this with a quarterly restore drill. These two steps catch most integrity issues early and prove compliance with minimal overhead.
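The promotion gate in the tip above can be sketched with the Python standard library. Two assumptions are worth stating loudly: HMAC-SHA256 with a shared key stands in for a real signature scheme (production pipelines would more likely use asymmetric signatures), and the hash-chained list is only tamper-evident, not truly immutable storage. All function names are hypothetical.

```python
import hashlib
import hmac
import json

GENESIS = "0" * 64  # placeholder "previous hash" for the first log entry


def sign_artifact(data: bytes, key: bytes) -> str:
    """Stand-in signature: HMAC-SHA256 over the artifact bytes."""
    return hmac.new(key, data, hashlib.sha256).hexdigest()


def verify_artifact(data: bytes, key: bytes, signature: str) -> bool:
    """Constant-time comparison of the expected and supplied signatures."""
    return hmac.compare_digest(sign_artifact(data, key), signature)


def _entry_hash(prev_hash: str, event: dict) -> str:
    body = json.dumps(event, sort_keys=True)
    return hashlib.sha256((prev_hash + body).encode()).hexdigest()


def append_log(log: list, event: dict) -> None:
    """Append an event whose hash covers the previous entry's hash."""
    prev = log[-1]["entry_hash"] if log else GENESIS
    log.append({"event": event, "prev_hash": prev,
                "entry_hash": _entry_hash(prev, event)})


def log_is_intact(log: list) -> bool:
    """Recompute the chain; any edited or removed entry breaks it."""
    prev = GENESIS
    for entry in log:
        if entry["prev_hash"] != prev:
            return False
        if entry["entry_hash"] != _entry_hash(prev, entry["event"]):
            return False
        prev = entry["entry_hash"]
    return True


def promote(name: str, data: bytes, key: bytes,
            signature: str, log: list) -> bool:
    """Allow promotion only if the signature verifies; log either outcome."""
    ok = verify_artifact(data, key, signature)
    append_log(log, {"artifact": name, "verified": ok})
    return ok


key = b"demo-key"  # hypothetical; never hard-code real keys
weights = b"model-weights-v1"
audit_log: list = []
sig = sign_artifact(weights, key)
print(promote("model-v1", weights, key, sig, audit_log))         # True
print(promote("model-v1", weights + b"x", key, sig, audit_log))  # False
print(log_is_intact(audit_log))                                  # True
```

Logging failed verifications as well as successful ones gives the audit trail something to show when a tampered artifact is rejected.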

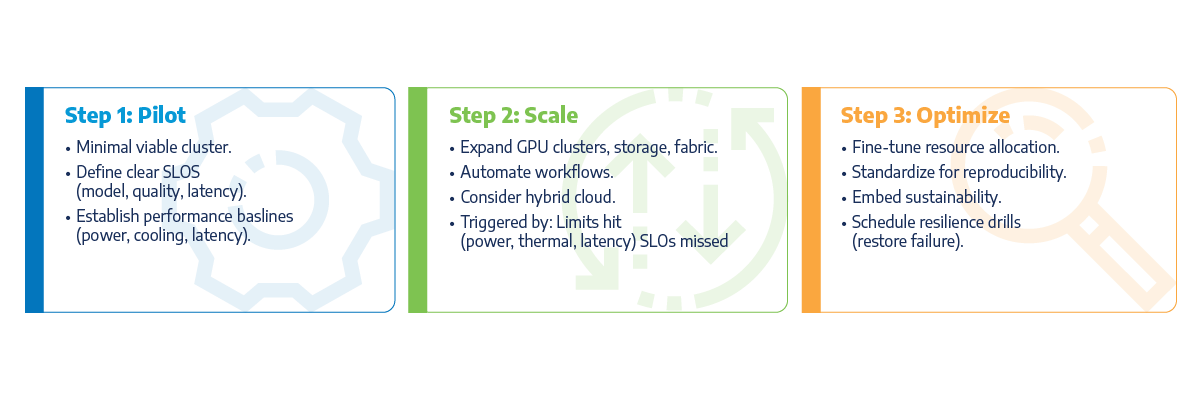

Pilot, Scale, and Optimize with a Practical AI Data Center Roadmap

Preparing for production-level AI is not optional, and a solid plan is the best way to manage performance, control spending, and meet your security needs. This means moving from pilot to production with clear success metrics, strict operational guardrails, and a smart plan to scale.

Step 1: Pilot

The pilot phase is about starting small to learn fast. The goal is to prove value on a limited scale while establishing the technical baselines and operational discipline you'll need for future growth.

- Define clear success metrics: Focus on one or two contained use cases and establish hard SLOs from the start, such as model quality, p99 latency, and job throughput.

- Build a minimal viable cluster: Assemble the core compute, storage, and network fabric, then run acceptance tests to validate performance under real-world conditions like thermal stress and network microbursts.

- Establish your baseline: Document data governance and protection rules early. Measure initial power, cooling, and performance so you have a clear reference point for measuring success later.

Step 2: Scale

Once your pilot has proven its value, the next step is to scale your infrastructure and automate your processes to support broader adoption.

- Expand capacity and automate workflows: Methodically grow your GPU clusters, storage, and network fabric while automating key operational workflows like provisioning and change management.

- Consider a hybrid strategy: Evaluate multicloud or hybrid cloud options if they can improve your cost structure, reduce latency, or increase overall resilience.

- Scale with data-driven triggers: Know precisely when to add capacity by monitoring for specific events, such as:

  - Rack power or thermal limits are consistently being hit

  - Network latency or oversubscription exceeds your defined budget

  - Storage throughput per GPU drops below your target

  - Job queue wait times regularly miss your SLOs
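The four scaling triggers above can be kept as a small rule table so they are reviewed and versioned like any other configuration. This is a minimal sketch: the metric names and limits are hypothetical, and the metric values are assumed to be already aggregated over a window so that one spike does not count as "consistently" breaching.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Trigger:
    name: str
    metric: str       # key in the metrics snapshot
    limit: float
    breach_when: str  # "above" or "below"


def breached(triggers: list, metrics: dict) -> list:
    """Return the names of triggers whose metric is past its limit."""
    hits = []
    for t in triggers:
        value = metrics[t.metric]
        over = t.breach_when == "above" and value > t.limit
        under = t.breach_when == "below" and value < t.limit
        if over or under:
            hits.append(t.name)
    return hits


# Hypothetical limits; set these from your own SLOs and facility ratings.
TRIGGERS = [
    Trigger("rack power", "rack_power_pct", 90.0, "above"),
    Trigger("network latency", "p99_latency_us", 50.0, "above"),
    Trigger("storage per GPU", "storage_gbs_per_gpu", 0.3, "below"),
    Trigger("queue wait", "queue_wait_min", 30.0, "above"),
]

# Hypothetical windowed averages from monitoring.
snapshot = {
    "rack_power_pct": 93.0,
    "p99_latency_us": 41.0,
    "storage_gbs_per_gpu": 0.25,
    "queue_wait_min": 12.0,
}

print(breached(TRIGGERS, snapshot))  # ['rack power', 'storage per GPU']
```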

Step 3: Optimize

With a scaled environment in place, the focus shifts to refinement by improving efficiency, ensuring reproducibility, and embedding long-term sustainability into your operations.

- Fine-tune resource allocation: Right-size your compute pools and adjust headroom to eliminate waste and maximize utilization.

- Standardize for reproducibility: Improve reliability by standardizing on “golden” images and configuration baselines for all components.

- Embed sustainability and resilience: Integrate metrics like power efficiency into your reporting, and schedule regular operational drills like restore tests and failure simulations.

Checkpoints and Runbooks

Create clear operational safety nets to maintain stability as your environment evolves. Start by documenting rollback procedures for driver, firmware, and network changes (who does what, in what order, and where to find backups). Then automate acceptance tests and run them after every change window, recording baseline throughput and latency, so you can spot regressions quickly and roll back with confidence.
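Comparing a post-change acceptance run against the recorded baseline can be automated in a few lines. The sketch below assumes a 5% tolerance and two illustrative metric names; both are placeholders, and the set of "higher is better" metrics must be chosen to match what you actually record.

```python
def find_regressions(baseline: dict, current: dict,
                     tolerance: float = 0.05,
                     higher_is_better: tuple = ("throughput_gbps",)) -> dict:
    """Return {metric: (baseline, current)} for metrics worse than tolerance."""
    regressions = {}
    for metric, base in baseline.items():
        now = current[metric]
        if metric in higher_is_better:
            worse = now < base * (1 - tolerance)   # throughput dropped
        else:
            worse = now > base * (1 + tolerance)   # latency grew
        if worse:
            regressions[metric] = (base, now)
    return regressions


# Hypothetical numbers recorded before and after a firmware change.
baseline = {"throughput_gbps": 380.0, "p99_latency_us": 20.0}
after_change = {"throughput_gbps": 350.0, "p99_latency_us": 20.5}

result = find_regressions(baseline, after_change)
print(result or "no regressions")
if result:
    print("roll back per the documented procedure")
```

Here latency stays within tolerance but throughput falls by more than 5%, so the check flags the change for rollback.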

Make Sure Your Data Center Is AI-ready

Turning an AI pilot into a production system requires a clear plan. Without one, you risk hitting walls with power, networking, and security that stall progress and inflate costs.

A successful rollout depends on a disciplined blueprint. It means creating a phased roadmap with clear triggers for scaling, standardizing your infrastructure into repeatable building blocks, and using telemetry to spot issues before they impact performance.

Connection helps you build and operate your AI-ready data center roadmap. We partner with you at every stage to help you build a solid foundation for scalable AI readiness:

- Assess and plan: We start by analyzing your current data center, cloud, and security posture to build a clear, actionable roadmap with predictable costs and timelines.

- Design and build: From GPU clusters and high-speed networks to tiered storage and advanced liquid cooling, we plan, design, and implement the AI infrastructure you need.

- Migrate and optimize: We manage the entire migration and consolidation process for you, then implement automation and proactive health checks to ensure your environment is always running at peak performance.

- Secure and operate: Our managed security services handle everything from threat monitoring and compliance to cloud operations, giving you steady performance and predictable spending.

- Edge and embedded AI: When models must run on devices or at the edge, we assess requirements, select CPU, GPU, or NPU hardware, align data orchestration, and accelerate pilots.

Data Center Transformation Services

Artificial Intelligence for IT Operations (AIOps)

AIOps enhances data center efficiency by automating performance monitoring, anomaly detection, and root cause analysis. It leverages machine learning to optimize resource usage, predict failures, and streamline incident response, reducing downtime and improving scalability across complex infrastructure environments.